The IIS Chip Gallery

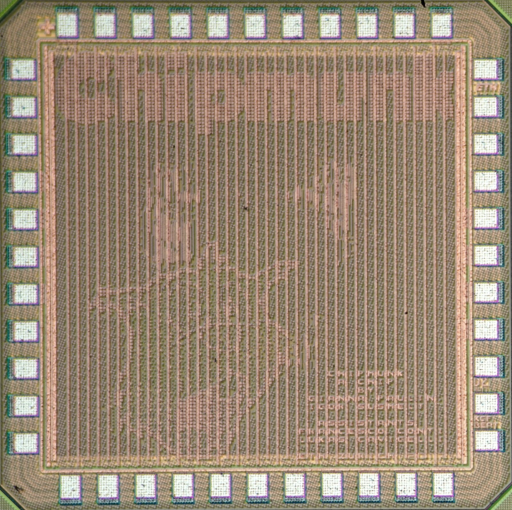

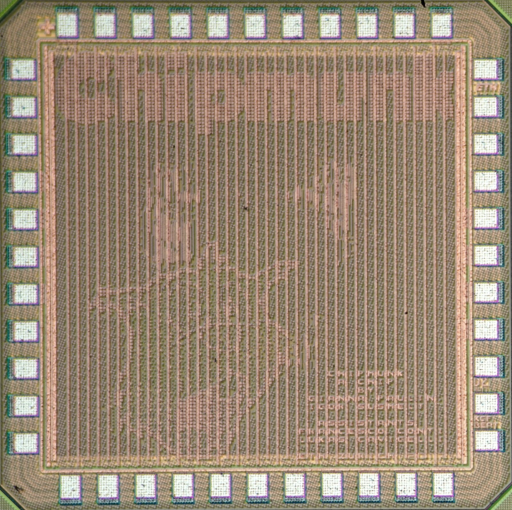

Chipmunk (2016)

| Property | Value |
| --- | --- |
| Application | Machine learning |
| Technology | 65 nm |
| Manufacturer | UMC |
| Type | Semester Thesis |
| Package | QFN40 |
| Dimensions | 1252 μm x 1252 μm |
| Gates | 600 kGE |
| Voltage | 1.2 V |
| Power | 29.03 mW @ 1.24 V, 168 MHz |
| Clock | 168 MHz |

Chipmunk is a small (<1 mm^2) hardware accelerator for long short-term memory (LSTM) recurrent neural networks (RNNs) in UMC 65 nm technology, capable of operating at a measured peak efficiency of up to 3.08 Gop/s/mW at 1.24 mW peak power. It is a multi-chip, systolically scalable hardware architecture: each accelerator keeps all parameters stationary on its die in the array, drastically reducing I/O communication to loading new features and sharing partial results with other dies.
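As a quick sanity check on the figures above, the throughput at the peak-efficiency operating point can be estimated by multiplying efficiency by power. This is only a back-of-the-envelope sketch: it assumes the 3.08 Gop/s/mW and 1.24 mW figures refer to the same operating point, and the variable names are illustrative.

```python
# Figures quoted in the description above.
peak_efficiency_gops_per_mw = 3.08  # Gop/s per mW
peak_power_mw = 1.24                # mW

# Throughput = efficiency x power.
# Assumption: both figures describe the same operating point.
throughput_gops = peak_efficiency_gops_per_mw * peak_power_mw

print(f"{throughput_gops:.2f} Gop/s")  # prints "3.82 Gop/s"
```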

When Chipmunk was taped out, RNNs were state of the art in voice awareness/understanding and speech recognition. On-device computation of RNNs on low-power mobile and wearable devices was key to applications such as zero-latency voice-based human-machine interfaces.

In this work we present Chipmunk, an implementation of such an LSTM network on a chip. Furthermore, we show that, thanks to its systolic architecture, the chip scales easily in both the number of layers and the number of hidden units. We evaluated a grid of 4 chips working together to compute a speech recognition LSTM network with 192 hidden units and 1 layer. The ASIC implementation shows an increase in power efficiency by a factor of around 200 compared to an FPGA implementation. This chip could offer embedded, low-power speech recognition on a device such as a smartwatch.
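The idea of a chip grid with stationary parameters can be illustrated with a small software model. The sketch below is not the actual Chipmunk design: the `Tile` class, the helper names, and the 2 x 2 arrangement of the 4 chips are assumptions made for illustration. It shows only the matrix-vector product at the core of an LSTM step. Each tile holds one fixed block of the weight matrix, receives only its slice of the input, and passes partial sums to its neighbour.

```python
import random

def matvec(W, x):
    """Reference: plain matrix-vector product on a single device."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def split(seq, parts):
    """Split a sequence into `parts` equal contiguous chunks."""
    size = len(seq) // parts
    assert size * parts == len(seq), "length must divide evenly (assumption)"
    return [seq[i * size:(i + 1) * size] for i in range(parts)]

class Tile:
    """Hypothetical model of one die: it owns a fixed block of the weights."""

    def __init__(self, block):
        self.block = block  # loaded once, stays on this tile

    def step(self, x_chunk, partial_in):
        # Add this tile's contribution to the partial sums from its neighbour.
        local = matvec(self.block, x_chunk)
        return [p + l for p, l in zip(partial_in, local)]

def build_grid(W, rows, cols):
    """Distribute the weight matrix W over a rows x cols grid of tiles."""
    grid = []
    for row_block in split(W, rows):
        col_chunks = [split(r, cols) for r in row_block]
        grid.append([Tile([chunks[c] for chunks in col_chunks])
                     for c in range(cols)])
    return grid

def grid_matvec(grid, x):
    """Compute W @ x; only input slices and partial sums move between tiles."""
    x_chunks = split(x, len(grid[0]))
    out = []
    for tile_row in grid:
        partial = [0.0] * len(tile_row[0].block)
        for tile, x_chunk in zip(tile_row, x_chunks):
            partial = tile.step(x_chunk, partial)
        out.extend(partial)
    return out

# Example: 192 hidden units on 4 tiles, arranged 2 x 2 (assumed layout).
random.seed(0)
n = 192
W = [[random.randint(-3, 3) for _ in range(n)] for _ in range(n)]
h = [random.randint(-3, 3) for _ in range(n)]
grid = build_grid(W, rows=2, cols=2)
assert grid_matvec(grid, h) == matvec(W, h)  # tiled result matches reference
```

In this model, supporting more hidden units means adding tiles to the grid rather than enlarging any single tile, which is the sense in which such an arrangement scales.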

Why call this chip Chipmunk? Well, Igor and Gianna are really afraid of chipmunks, and during this project they wanted to conquer their fears. The chipmunk is a small rodent from the squirrel family. This website has some information on how to distinguish chipmunks from squirrels. However, the website covers North America and does not deal with the Asiatic striped squirrel, another close relative that is striped slightly differently.